Does teaching philosophy to children improve their reading, writing and mathematics achievement? (Guest post by @mjinglis)

July 14, 2015

I’ve been getting a bit concerned that the EEF’s evaluations of educational methods, which were meant to help provide a more solid evidence base for teaching, are actually leading to the same sort of unreliable research and hype that we have seen all too often in educational research. The following guest post is by Matthew Inglis (@mjinglis) who kindly offered to comment on a big problem with the recent, widely-reported study showing the effectiveness of Philosophy for Children (P4C).

On Friday the Independent newspaper tweeted that the “best way to boost children’s maths scores” is to “teach them philosophy”. A highly implausible claim one might think: surely teaching them mathematics would be better? The study which gave rise to this remarkable headline was conducted by Stephen Gorard, Nadia Siddiqui and Beng Huat See of Durham University. Funded by the Education Endowment Foundation (EEF), they conducted a year-long investigation of the ‘Philosophy for Children’ (P4C) teaching programme. The children who participated in P4C engaged in group dialogues on important philosophical issues – the nature of truth, fairness, friendship and so on.

I have a lot of respect for philosophy and philosophers. Although it is not my main area of interest, I regularly attend philosophy conferences, I have active collaborations with a number of philosophers, and I’ve published papers in philosophy journals and edited volumes. Encouraging children to engage in philosophical conversations sounds like a good idea to me. But could it really improve their reading, writing and mathematics achievement? Let alone be the best way of doing this? Let’s look at the evidence Gorard and colleagues presented.

Gorard and his team recruited 48 schools to participate in their study. About half were randomly allocated to the intervention: they received the P4C programme. The others formed the control group. The primary outcome measures were Key Stage 1 and 2 results for reading, writing and mathematics. Because different tests were used at KS1 and KS2, the researchers standardised the scores from each test so that they had a mean of 0 and a standard deviation of 1.
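Standardising each test separately (subtract the test's mean, divide by its standard deviation) puts KS1 and KS2 results on a common scale, so that a pupil's pre- and post-test scores can be compared in standard-deviation units. The sketch below uses made-up marks; whether the report used the population or the sample standard deviation is not stated, so the choice of `pstdev` here is an assumption.

```python
from statistics import mean, pstdev

def standardise(scores):
    """Rescale raw test scores to mean 0 and standard deviation 1 (z-scores)."""
    mu = mean(scores)
    sigma = pstdev(scores)  # population SD (assumption; a sample SD rescales slightly differently)
    return [(s - mu) / sigma for s in scores]

# Hypothetical raw marks from two different tests (not data from the trial).
ks1_raw = [12, 15, 9, 18, 14, 11]
ks2_raw = [85, 102, 77, 110, 96, 88]

ks1_z = standardise(ks1_raw)
ks2_z = standardise(ks2_raw)
print([round(z, 2) for z in ks1_z])
print([round(z, 2) for z in ks2_z])
```

After standardisation both lists have mean 0 and SD 1, whatever the raw marking scheme was.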

The researchers reported that the intervention had yielded greater gains for the treatment group than the control group, with effect sizes of g = +0.12, +0.03 and +0.10 for reading, writing and mathematics respectively. In other words, for reading and mathematics the rate of improvement was around a tenth of a standard deviation greater in the treatment group than in the control group. These effect sizes are trivially small, but the sample was extremely large (N = 1529), so perhaps they are meaningful. But before we start to worry about issues of statistical significance*, we need to take a look at the data. I’ve plotted the means of the groups here.

Any researcher who sees these graphs should immediately spot a rather large problem: there were substantial group differences at pre-test. In other words the process of allocating students to groups, by randomising at the school level, did not result in equivalent groups.

Why is this a problem? Because of a well-known statistical phenomenon called regression to the mean. If a variable is more extreme on its first measurement, then it will tend to be closer to the mean on its second measurement. This is a general phenomenon that will occur any time two successive measurements of the same variable are taken and those measurements are imperfectly correlated, which is the case whenever each measurement contains some random error.

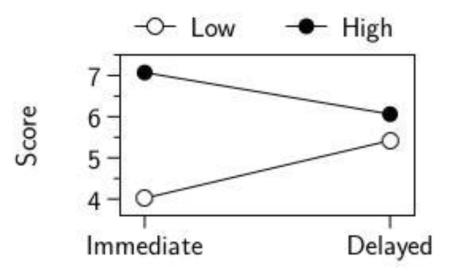

Here’s an example from one of my own research studies (Hodds, Alcock & Inglis, 2014, Experiment 3). We took two achievement measurements after an educational intervention (the details don’t really matter), one immediately and one two weeks later. Here I’ve split the group of participants into two – a high-achieving group and a low-achieving group – based on their scores on the immediate post test.

As you can see, the high achievers in the immediate post test performed worse in the delayed post test, and the low achievers performed better. Both groups regressed towards the mean. In this case we can be absolutely sure that the low achieving group’s ‘improvement’ wasn’t due to an intervention because there wasn’t one: the intervention took place before the first measurement.
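The same pattern can be reproduced in a small simulation in which, by construction, nothing at all happens between the two measurements. This is a minimal sketch, not the analysis from the paper: it assumes each hypothetical participant has a stable "true" achievement plus independent noise on each occasion (the noise size of 0.7 is arbitrary), and splits the group into halves on the first measurement only.

```python
import random
from statistics import mean

random.seed(1)

# Hypothetical participants: stable true achievement plus independent noise per test.
# There is no intervention between the two measurements.
true_scores = [random.gauss(0, 1) for _ in range(2000)]
first = [t + random.gauss(0, 0.7) for t in true_scores]
second = [t + random.gauss(0, 0.7) for t in true_scores]

# Split into high and low achievers on the FIRST measurement only.
cut = sorted(first)[len(first) // 2]
high = [i for i, x in enumerate(first) if x >= cut]
low = [i for i, x in enumerate(first) if x < cut]

def group_means(idx):
    """Mean of the first and second measurements for the given participants."""
    return mean(first[i] for i in idx), mean(second[i] for i in idx)

high_first, high_second = group_means(high)
low_first, low_second = group_means(low)
print(f"high achievers: {high_first:.2f} -> {high_second:.2f}")
print(f"low achievers:  {low_first:.2f} -> {low_second:.2f}")
```

The high group's mean falls and the low group's mean rises between the two measurements, purely because part of each first score was noise that does not recur.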

Regression to the mean is a threat to validity whenever two groups differ on a pre-test. And, unfortunately for Gorard and colleagues, their treatment group performed quite a bit worse than their control group at pre-test. So the treatment group was always going to regress upwards, and the control group was always going to regress downwards. It was inevitable that there would be a between-groups difference in gain scores, simply because there was a between-groups difference on the pre-test.
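The following simulation illustrates the point with random allocation and no treatment effect at all. It is a simplified sketch under stated assumptions: it randomises individual pupils rather than schools, and it assumes standardised pre- and post-test scores correlated at r = 0.75 (a hypothetical value, in the range one commenter below reports for KS1 and KS2). Over many random splits, those in which the "treatment" half happened to start behind tend to show a larger gain for that half.

```python
import random
from statistics import mean

random.seed(2)
R = 0.75          # assumed pre/post correlation; there is NO treatment effect
N_PUPILS = 400
N_SPLITS = 1000

# Standardised pre/post scores with correlation R.
pre, post = [], []
for _ in range(N_PUPILS):
    x = random.gauss(0, 1)
    y = R * x + (1 - R ** 2) ** 0.5 * random.gauss(0, 1)
    pre.append(x)
    post.append(y)
gain = [b - a for a, b in zip(pre, post)]

pre_gaps, gain_gaps = [], []
for _ in range(N_SPLITS):
    idx = list(range(N_PUPILS))
    random.shuffle(idx)  # a fresh random allocation into two equal halves
    treat, control = idx[: N_PUPILS // 2], idx[N_PUPILS // 2:]
    pre_gaps.append(mean(pre[i] for i in treat) - mean(pre[i] for i in control))
    gain_gaps.append(mean(gain[i] for i in treat) - mean(gain[i] for i in control))

# Compare allocations where the treatment half started behind vs ahead at pre-test.
behind = [g for p, g in zip(pre_gaps, gain_gaps) if p < 0]
ahead = [g for p, g in zip(pre_gaps, gain_gaps) if p > 0]
print(f"mean gain gap when treatment started behind: {mean(behind):+.3f}")
print(f"mean gain gap when treatment started ahead:  {mean(ahead):+.3f}")
```

With school-level randomisation, part of any pre-test gap may reflect stable differences between schools, which would not regress; the size of the artefact therefore depends on how much of the gap is transient.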

So what can we conclude from this study? Very little. Given the pre-test scores, if the P4C intervention had no effect whatsoever on reading, writing or mathematics, then this pattern of data is exactly what we would expect to see.

What is most curious about this incident is that this obvious account of the data was not presented as a possible (let alone a highly probable) explanation in the final report, or in any of the EEF press releases about the study. Instead, the Director of the EEF was quoted as saying “It’s absolutely brilliant that today’s results give us evidence of [P4C]’s positive impact on primary pupils’ maths and reading results”, and Stephen Gorard remarked that “these philosophy sessions can have a positive impact on pupils’ maths, reading and perhaps their writing skills.” Neither of these claims is justified.

That such weak evidence can result in a national newspaper reporting that the “best way to boost children’s maths scores” is to “teach them philosophy” should be of concern to everyone who cares about education research and its use in schools. The EEF ought to pause and reflect on the effectiveness of their peer review system and on whether they include sufficient caveats in their press releases.

*The comment about “statistical significance” reflects additional concerns others had expressed about the methodology, for instance: here.

Update (6/3/2021): The results of the larger and more expensive trial have now come out. It found:

- There is no evidence that P4C had an impact on reading outcomes on average for KS2 pupils from disadvantaged backgrounds (i.e. FSM eligible pupils). This result has a high security rating.

- Similarly, there is no evidence that P4C had an impact on reading attainment at KS2 for the whole cohort of Year 6 pupils. There is also no evidence that P4C had an impact on attainment in maths for KS2 pupils – either for the whole cohort, or for pupils from disadvantaged backgrounds.

I doubt that many, if any, of our younger journalists have even heard of regression to the mean, let alone have the ability to spot it from the given data.

I must admit I hadn’t read the paper, but I am staggered to see the charts here. Is there any analysis of school-level attrition in the study that explains why this happened, or was it just unlucky randomisation on a relatively small sample? There are a number of standard approaches to ensure balanced, randomised samples. Given they were just using KS data, they could have retained school drop-outs in the analysis and estimated an intention-to-treat effect.

From the report: “Schools were randomised to two groups. A set of 48 random numbers was created, 22 representing intervention and 26 representing control schools, and then allocated to the list of participating schools in alphabetical sequence. Randomisation was conducted openly by the lead evaluator based on a list of schools and witnessed by colleagues.”

There is no school-level attrition.

I guess there are two issues:

(1) one favoured approach to randomising with small samples is to create matched pairs of schools and then randomise within the pairs. This should avoid unbalanced samples.

(2) but in this case they probably didn’t have the ‘pre’ data at the point of randomisation. Perhaps they didn’t know how unbalanced the samples were until after the experiment had started.
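A rough way to see why matched pairs help is to compare the pre-test imbalance each method produces over many allocations. This sketch uses hypothetical school baseline means, not trial data, and assumes baseline scores are available at the time of randomisation (which, as point (2) notes, may not have been the case). The "simple" method mimics the procedure quoted from the report (22 intervention and 26 control labels allocated at random); the matched-pair method necessarily splits 24/24.

```python
import random
from statistics import mean

random.seed(3)
N_SCHOOLS = 48
# Hypothetical school-level baseline (pre-test) means; not data from the trial.
baseline = [random.gauss(0, 0.3) for _ in range(N_SCHOOLS)]

def simple_allocation(n_treat=22):
    """Randomly allocate 22 'intervention' and 26 'control' labels to the schools."""
    labels = [True] * n_treat + [False] * (N_SCHOOLS - n_treat)
    random.shuffle(labels)
    return labels

def matched_pair_allocation():
    """Pair schools with adjacent baselines, then pick one of each pair at random (24/24)."""
    order = sorted(range(N_SCHOOLS), key=lambda s: baseline[s])
    labels = [False] * N_SCHOOLS
    for k in range(0, N_SCHOOLS, 2):
        a, b = order[k], order[k + 1]
        labels[random.choice((a, b))] = True
    return labels

def imbalance(labels):
    """Absolute difference in mean baseline between treatment and control schools."""
    treat = [b for b, t in zip(baseline, labels) if t]
    control = [b for b, t in zip(baseline, labels) if not t]
    return abs(mean(treat) - mean(control))

simple = mean(imbalance(simple_allocation()) for _ in range(2000))
paired = mean(imbalance(matched_pair_allocation()) for _ in range(2000))
print(f"average |pre-test gap|, simple randomisation: {simple:.3f}")
print(f"average |pre-test gap|, matched pairs:        {paired:.3f}")
```

Because each pair contains two schools with similar baselines, whichever one is chosen, the two arms end up closely balanced on the matching variable.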

I’d like to see the treated schools re-matched into a sample of non-treated schools with similar pre-characteristics. Not an RCT, but might give us a clue as to how much the results stand up to the regression to the mean problem.

I just cannot understand why the EEF do this. The comment that the result is ‘absolutely brilliant’ seems to indicate a perhaps inappropriate emotional engagement with the result, but why?

[…] The problems with the statistics have been written about in greater detail here and also here. […]

I agree that some basic philosophy as you described is a good idea, but I expect that it’ll be used as another time-wasting distraction. Anything, anything at all, rather than allow the explicit teaching of reading, writing and counting. The expression “opportunity cost” springs to mind.

Maybe I am being cynical.

[…] Matthew Inglis @mjinglis (click here) makes the same point more elegantly with this graph of the three scores before and after the […]

Hi there, do you have a reference for the Gorard et al paper, I am having problems locating it and would like to read it in full. Thanks.

One of the things that makes me sad about this is that EEF are meant to be the people who are *good* at this sort of thing; overall, they are better at ed. research stats than many. And yet things like this still get through.

The difference between the control and intervention groups ought to have been a problem. In addition to that, the uncertainties are pretty big. Eyeballing the graphs, I don’t think it would be difficult to draw horizontal lines between the “pre” and “post” points; in other words, no effect at all.

It’s important that people like yourself, with a grasp of statistics, continue to point out the flaws in this kind of ‘evidence’. You should assume that the general teaching population does not understand it at all. These are people who still think it is meaningful to report the percentages of pupils making given amounts of progress when the group size is less than ten.

They’re not people who are ‘good’ at this sort of thing. The people who wrote this report have degrees in Psychology, Geography and Medicine. The rest of the EEF equally have no training in Statistics.

I was really pleased to see the results from the evaluation of P4C, as I had read other studies suggesting that this could be an effective intervention, and it fits with the research on oral language as a predictor of later reading scores. I was therefore dismayed to read this post, which appears to demonstrate that the only effect the study measured was a statistical anomaly. However, I am struggling to understand how this study demonstrates regression to the mean because, from reading the link you sent, this experiment does not seem to fit the criteria: the samples were not selected non-randomly, and the means are not a long way from the population mean. My knowledge of stats is a bit shaky, so I am hoping you can help me understand this by addressing my two questions below:

1) In both the treatment and control group for reading, the sample means are very close to the population mean of zero (-0.08 and +0.08). Taking the correlation between KS1 and KS2 as 0.75 (this varies from 0.69 up to 0.84 on the few papers I read), I calculate the regression to the mean (if the intervention had no effect) would be a 25% move towards 0. This would lead to the treatment group’s mean on post test moving 0.015 points towards zero, therefore if the intervention had no effect wouldn’t you expect the post test mean to be around -0.07, and not -0.02 as reported?
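The calculation in this question assumes a simple model: both tests are standardised (mean 0, SD 1) and correlate at r, so that with no intervention a group's expected post-test mean is r times its pre-test mean, i.e. it moves a fraction (1 − r) of the way towards zero. A minimal sketch with the figures given above (pre-test means of −0.08 and +0.08, r = 0.75); it treats the group means as if they behaved like individual scores, which is itself an assumption. Under this model a 25% move from −0.08 comes to 0.02 rather than 0.015; either way the expected shift is a couple of hundredths of a standard deviation.

```python
def expected_post_mean(pre_mean, r):
    """Expected standardised post-test mean with no intervention: r * pre_mean."""
    return r * pre_mean

for label, pre_mean in [("treatment", -0.08), ("control", +0.08)]:
    post = expected_post_mean(pre_mean, r=0.75)
    shift = post - pre_mean  # movement towards zero caused by regression alone
    print(f"{label}: pre {pre_mean:+.2f} -> expected post {post:+.3f} (shift {shift:+.3f})")
```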

2) In the example you present from your own paper, you have intentionally sampled the high and low achievers and therefore the means for these two groups would differ significantly from the population mean (as you have sampled from either end of the normal distribution). How can this example therefore be comparable to the P4C data as the samples were random (and pretest sample means are close to the population mean) whereas the samples you present were non-random and by their very nature their means cannot be close to the population mean (e.g. high and low scores)?

I am sure I am missing something simple and obvious, but as a professional in training who will be recommending interventions to schools soon, I really need to understand what these findings really mean and therefore I would appreciate any further explanation you can offer to show where my thinking is wrong.

Thanks.

Hi Juliet

Thanks for your comment. A few quick responses:

(i) The random allocation here was done at the school level rather than the participant level, so there wasn’t randomisation in the sense described at the Research Methods Knowledge Base. (And, even when genuine participant-level randomisation happens, you can still be unlucky and get poorly matched groups, in which case you still need to be worried about regression to the mean.)

(ii) You say that the reading pre-test means are close to the population mean, which they are in some sense, but they’re not in another. For instance, they are both significantly different to it (ps=.023), and are significantly different to each other (p=.002).

(iii) It’s hard to know what the size of any regression to the mean would be here, but suppose you’re right that it would reduce the overall effects by .03 (both groups regress, of course). A rough-and-ready analysis suggests that this is plenty large enough to abolish all the effects (in the sense that they would no longer reach the conventional level of statistical significance). For instance, a t-test with gain-score means of +0.045 and -0.035 and SDs of 0.88 and 0.91 yields t(1527)=1.75, p=.08. Such a large p coupled with such a large sample size is much more compatible with the null hypothesis of no effect than with any sensible alternative hypothesis. See, for instance, http://daniellakens.blogspot.nl/2014/05/the-probability-of-p-values-as-function.html So there’s no good reason to suppose that these data aren’t simply random noise plus a bit of regression to the mean.
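The t-test figures above can be checked with a pooled-variance two-sample t statistic. The comment gives only the total sample (df = 1527, so N = 1529), so the near-equal group sizes of 764 and 765 below are an assumption, and the p-value uses the normal approximation, which is very close to the t distribution at this many degrees of freedom. Like the original rough analysis, this ignores the clustering of pupils within schools.

```python
import math

def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's two-sample t statistic with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df

def two_sided_p_normal(t):
    """Two-sided p-value from the normal approximation (adequate for large df)."""
    return math.erfc(abs(t) / math.sqrt(2))

# Group sizes 764/765 are assumed; only the total N = 1529 is given.
t, df = pooled_t(0.045, 0.88, 764, -0.035, 0.91, 765)
print(f"t({df}) = {t:.2f}, p = {two_sided_p_normal(t):.2f}")
```

This prints `t(1527) = 1.75, p = 0.08`, matching the figures quoted.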

(iv) Yes, you’re quite right that the example from my paper isn’t directly analogous (although, as I note above, the two groups in this trial also differ significantly from the overall mean). I thought it would be helpful to include it as it shows what regression to the mean can do to unbalanced groups in the case where we know for certain that there was no intervention.

(v) Even if regression to the mean wasn’t a problem at all, you should be sceptical of these results because, as Jim Thornton astutely points out in his blog, the researchers failed to follow their own trial protocol. So all the discussions here are about post-hoc analyses, not the pre-specified analyses (none of which show any effects). See:

(vi) Finally, none of this is to say that P4C isn’t a valuable thing to do. As I mention in the post, teaching philosophy to children sounds like a great idea to me.

Hope that helps.

Hi Matthew, I really appreciate the time you have taken to respond. I will need some time to explore your points, as I am a relative novice to this level of statistical analysis and to the debates around the relevance (or not) of p values as well as effect sizes (which is what I am encouraged to focus on). I really do hope this evaluation is submitted as a research paper to a peer-reviewed journal, as this will (hopefully) invite a thorough critique. The other blog post you signpost is excellent further reading on this and demonstrates you are not the only one with concerns. I note that Stephen Gorard has referred critics to the security values in the appendix of the report, which suggest moderate security in the findings, but again I am not sure what this adds in terms of the points you raise. I hadn’t thought about randomisation at school level, given that the unit of measurement is individual results. I’m still not sure I understand the implications of this in terms of regression to the mean, but you have given me more to explore. Cheers.


It’s a pretty established fact that any intervention (even just telling kids they’re part of a trial of the latest teaching method) has a short term positive effect.

Was that controlled for with the control groups? Were they given a placebo intervention or were they just monitored without being aware they were part of the trial?

I also don’t think that regression to the mean is the problem here; I think it’s that the results aren’t statistically significant.

Why isn’t it regression to the mean? As Juliet points out above, regression to the mean occurs when we select groups from a subset of the distribution (i.e. selecting all the low/high scorers). This isn’t what’s happened here; the control group and the treatment group are meant to be random samples from the population.

Looking quickly at the graphs above, I would have estimated that the results were statistically significant. The error bars are a lot smaller than the gap between the means (not a perfect test, but a good rule of thumb). But those error bars are actually far too small. I suspect the calculation used here for the error bars was the sample standard deviation divided by the square root of the size of the sample (a.k.a. “standard error”). This calculation would be correct if each child were an independent sample, but they’re not: they’re grouped by school, and this makes a large difference.

I’m not a statistician and I’m happy to be corrected on the following calculations, but I believe we can calculate the true standard error as follows. Because the samples aren’t independent, we have effectively reduced the size of the sample. See here (http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1466680/) for more explanation of effective sample size and the related concept of design effect, and here (http://surveyanalysis.org/wiki/Design_Effects_and_Effective_Sample_Size) for an explanation of using this to correct the variance. Using the formula in the first link, I calculated an effective sample size of 114 for the control and 99 for the treatment, and respective design effects of 6.6 and 7.8 (making the slightly dubious assumption that all the schools had the same number of pupils, but this shouldn’t have too much of an effect). Using these we get standard errors of 0.24 and 0.28 respectively. So the error bars on the graph should be about six times larger.
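A sketch of the design-effect correction described above, under stated assumptions. Under the standard (Kish) definition, the design effect 1 + (m − 1) × ICC multiplies the variance of a mean, so the standard error grows by the square root of the design effect; equivalently SE = SD / √(effective n). The pupil counts below are back-calculated from the comment's figures (effective n × design effect), SD = 1 because the scores were standardised, and the ICC of 0.2 is hypothetical, though it is consistent with the design effects quoted. On these inputs the clustered standard errors come out at roughly 0.09 to 0.10, about 2.6 to 2.8 times the naive ones, rather than 0.24 and 0.28; the conclusion that the plotted error bars are too narrow is unaffected.

```python
import math

def design_effect(pupils_per_school, icc):
    """Kish design effect for equal-sized clusters: 1 + (m - 1) * ICC."""
    return 1 + (pupils_per_school - 1) * icc

def clustered_se(sd, n_pupils, deff):
    """Standard error of a mean when pupils are clustered in schools.

    The design effect inflates the variance, so the SE grows by sqrt(deff);
    this equals sd / sqrt(n_pupils / deff), i.e. sd / sqrt(effective n).
    """
    naive = sd / math.sqrt(n_pupils)
    return naive * math.sqrt(deff)

# Hypothetical ICC of 0.2 with about 29 pupils per school.
print(f"design effect (29 pupils/school, ICC 0.2): {design_effect(29, 0.2):.1f}")

# Pupil counts back-calculated from the comment (effective n x design effect).
for label, n_eff, deff in [("control", 114, 6.6), ("treatment", 99, 7.8)]:
    n_pupils = round(n_eff * deff)
    se = clustered_se(1.0, n_pupils, deff)  # SD = 1 for standardised scores
    print(f"{label}: naive SE {1 / math.sqrt(n_pupils):.3f}, "
          f"clustered SE {se:.3f}, ratio {math.sqrt(deff):.2f}")
```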

We could go on and calculate a significance value for the difference between the groups, but it probably wouldn’t be worth it – the error bars are far larger than the difference between the means, and the result is unlikely to be significant.

Interestingly, I think we could have gleaned this insight without all the calculations. The minimum effect size the experiment can detect is reported in the table on page 41 as between 0.3 and 0.4. But the largest effect size (on the main measures, not the FSM subgroup analyses) is 0.12.
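The minimum detectable effect size of a two-arm cluster-randomised trial can be approximated with a standard formula: MDES ≈ M × √(ICC / (P(1−P)J) + (1−ICC) / (P(1−P)Jn)), where J is the number of schools, n the pupils per school, P the proportion of schools treated and M ≈ 2.8 for 80% power at a two-sided 5% level. The sketch below plugs in 48 schools, 1529 pupils and 22 treated schools from the post; the ICC values are hypothetical, and adjusting for covariates such as prior attainment would lower the MDES. It shows why effects of 0.03 to 0.12 are hard to distinguish from zero in a trial of this design.

```python
import math

def mdes_cluster_rct(n_schools, pupils_per_school, icc, p_treated, multiplier=2.8):
    """Approximate minimum detectable effect size (SD units) for a cluster RCT.

    No covariate adjustment; multiplier 2.8 ~ 80% power, 5% two-sided alpha,
    many degrees of freedom.
    """
    pq = p_treated * (1 - p_treated)
    between = icc / (pq * n_schools)
    within = (1 - icc) / (pq * n_schools * pupils_per_school)
    return multiplier * math.sqrt(between + within)

for icc in (0.1, 0.15, 0.2):  # hypothetical intra-class correlations
    mdes = mdes_cluster_rct(48, 1529 / 48, icc, 22 / 48)
    print(f"ICC {icc}: MDES ~ {mdes:.2f}")
```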

Conclusion: I think their result is likely to be noise.

forget the bad *science*, people.

this isn’t even good *philosophy*.

[…] caused the most discussion, mainly because of some crucial aspects in the design. There was a guest post by Inglis, and this […]

I have no doubt that the findings of this report can be questioned and, as ever with every piece of investigation into learning, the results are susceptible to scrutiny.

I think however that there appears to be a misunderstanding of what P4C actually is. P4C is NOT the teaching of philosophy as some seem to imply. Children are not being taught Plato and Socrates. Instead the focus is on dialogic teaching – encouraging children to use exploratory dialogue in the classroom – to empower children and students in an education system which is objectives focused. P4C is not an intervention strategy to improve maths, reading and literacy (although improvements in these may be an effect) it is instead designed to prepare children and communities for their futures as critical citizens in a challenging society.

[…] thinking on this. EEF conducted an RCT of a course known as ‘philosophy for children’. There has been much comment on this with some people claiming that it demonstrates regression to the mean. However, let’s […]

[…] study, the Accelerated Reader study, and the P4C study (which has been criticised by others for its statistical weakness and poor interpretation). Defending that funding stream could be construed as a vested interest. […]

[…] guest post about some very misleading research on P4C that was widely […]

How to explain the drop in the control group, other than regression to the mean or sheer randomness? The control group should stay the same, or it pretty much shows it isn’t a very good control.

So we have a technique, P4C, that on the face of it has no mechanism for changing maths scores, which has significant effects on maths scores. Yet this raises no red flags?

If something seems too good to be true then it almost always is not true, except in education theory it seems.

[…] the children of very short parents are likely to be taller than their parents. (This also came up here.) Extreme measurements are not easily repeated, even when the first measurement is likely to be […]

[…] I looked at the EEF research on the impact of summer schools. It barely mentions the curriculum used as a likely explanation for variable outcomes of previous research. Similar problems are apparent in the EEF research on the programme ‘Philosophy for Kids’. Again the literature review is very brief. In particular the literature reviewer seemed unaware of the highly relevant and voluminous research in cognitive psychology on the likelihood of ‘far transfer’ of knowledge (i.e. skills developed learning philosophy transferring to widely different ‘far’ contexts such as improvements in maths and literacy). The research design takes no account of this prior work in designing the study and the report writer seems blissfully unaware that if the findings were correct (that ‘Philosophy for Kids’ did have an impact on progress in reading and maths) the impact on a whole field of enquiry would be seismic, overturning the conclusion of many decades of research by scores of cognitive psychologists on the likelihood of this sort of ‘far transfer’. Surely under these circumstances advising that this programme ‘can’t do any harm’ without even considering why the findings are contrary to a whole field of research, is foolhardy? (To say nothing of the tiny effect sizes found and serious questions about whether results simply show ‘regression to the mean’.) […]

[…] The Philosophy for Children study. This has been much criticised and with good reason (see here and here). […]

[…] Others have suggested that it is an example of a well-known statistical artefact known as ‘regression to the mean’, although the lead evaluator says they checked for […]


[…] not a huge fan of the Education Endowment Foundation, partly because they’ve allowed some pretty shoddy research in the past, and partly because they have a history of being partisan on certain issues. However, they do fund […]

[…] some claim that it rests on an error, and that the increase found was not due to the effect of Çifel (Philosophy for Children).6 https://teachingbattleground.wordpress.com/2015/07/14/does-teaching-philosophy-to-children-improve-t… So perhaps accepting the findings of the earlier research was a mistake all along. New […]

[…] I wasn’t the only one to smell a rat (see here, here and here). Professor Gorard, the lead evaluator, sent me a patronising email and blocked me on […]